Have you ever woken up to find your Power BI refresh failed overnight—again? If the culprit is duplicate keys, you’re not alone. This common issue can silently sneak into your model, especially when working with user-maintained data sources like Excel.

The good news? You can automate the detection and notification process so that stakeholders are immediately alerted and can take corrective action.

The Challenge

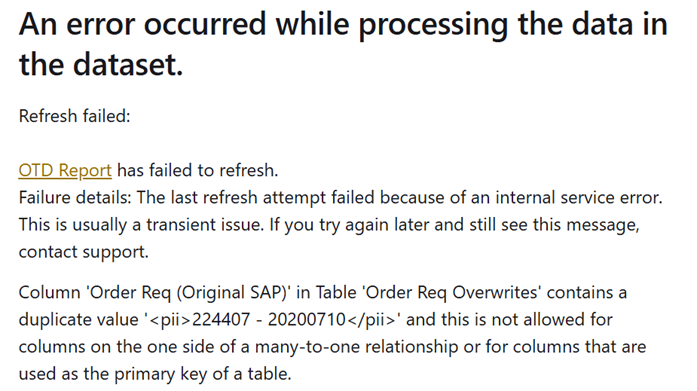

In Power BI, tables on the one-side of relationships (e.g., Product, Customer, Employee) must have unique keys. If duplicates sneak in, the refresh fails, and Power BI logs an error message that points to only one example of the offending value.

While databases typically enforce uniqueness, real-world data isn’t always so neat. Take this example:

- An international logistics company tracks deliveries in an Excel spreadsheet. Local teams enter data manually, but sometimes the same delivery number gets entered twice. The report expects unique delivery numbers, but duplicate entries cause the refresh to fail.

Diagnosing the Issue

If you encounter this error, your first instinct might be to check the source data manually. You could:

- Query the source system (or Excel file) directly for duplicates.

- Try refreshing in Power BI Desktop— disabling the relationship temporarily to allow the refresh, then building a table visual to identify all duplicates.

But manual troubleshooting isn’t scalable. What if you could set up an automated system that detects duplicates and alerts the right people before the refresh fails?

The Solution: A Secondary Report & Automatic Alerts

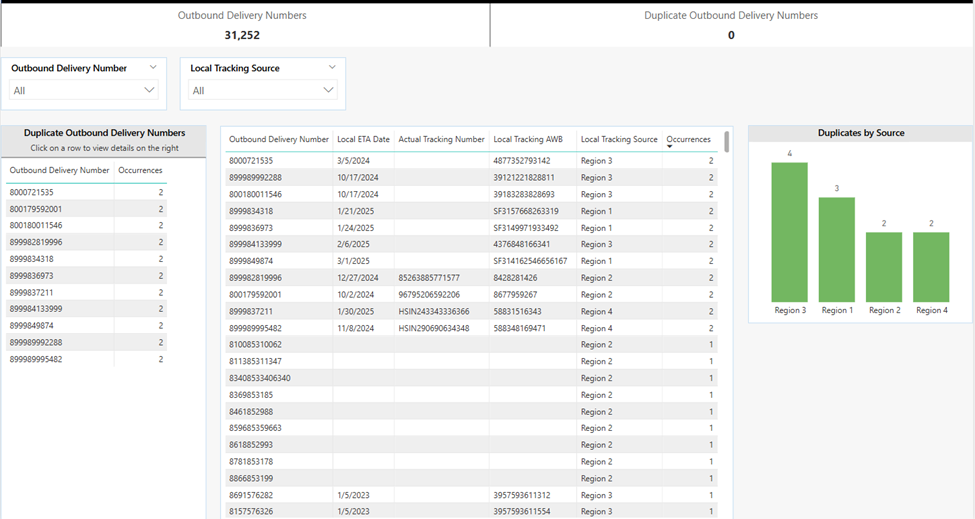

A practical approach is to create a secondary Power BI report dedicated to tracking duplicates. Here’s how it works:

- This secondary report is refreshed independently—even when the main report fails.

- A measure counts duplicate keys. If the count is greater than zero, an alert is triggered.

- Users receive an email notification with a direct link to the report, enabling them to resolve the issue quickly.

Consider this example report, dedicated to helping users visualize duplicate outbound delivery numbers:

Pro Tip: if you architect your solutions using dataflows, this secondary report does not have to redo any ETL logic in the main report.

Setting Up Alerts with Activator in Microsoft Fabric

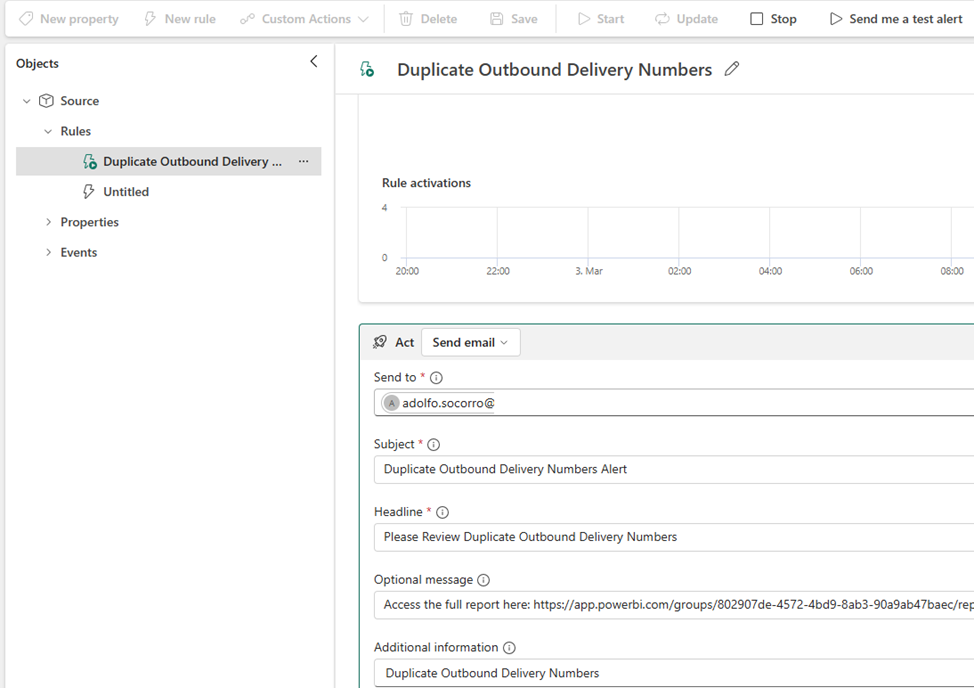

One way to automate this process is by using Activator, a no-code tool in Microsoft Fabric for automatically taking actions when patterns or conditions are detected in changing data.

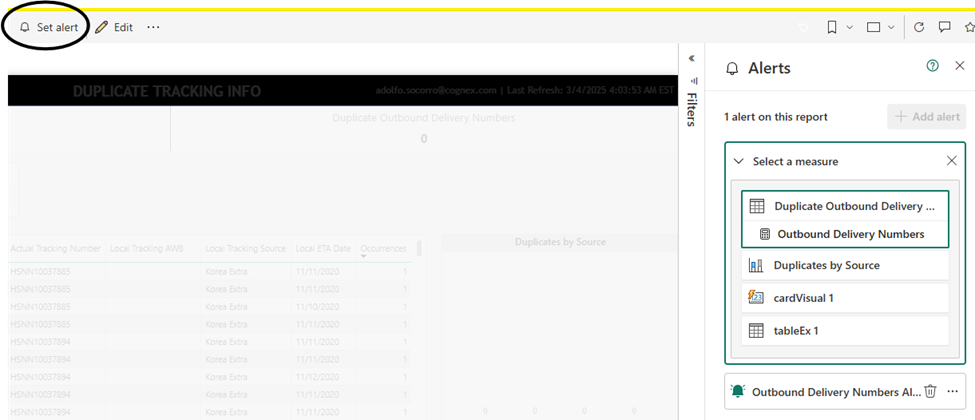

Here’s how to set up an alert in Activator:

- Open the secondary report in the Power BI Service.

- Click on “Set Alert” in the toolbar.

- Choose the trigger condition—in this case, a measure that counts duplicate keys.

- Set an action—send an email alert when duplicates are detected.

Note that the optional message includes a link to the report.

For the details on Activator, please refer to the official Microsoft documentation.

Alternative Alerting Methods

If you’re not using Microsoft Fabric, you can achieve similar functionality with:

- Power Automate—Create a flow that queries the secondary report for duplicates and, if found, sends an email notification.

- Power BI Dashboard Alerts—Set threshold-based notifications on card visuals sourced from the secondary report.

Closing the Gap Between Failure & Fix

With this automated approach, you reduce the time between a failed refresh and corrective action. Instead of discovering issues reactively, users receive instant alerts and can resolve duplicates before they impact reports.

By implementing this proactive strategy, you ensure your Power BI semantic models stay healthy—even when working with messy real-world data.

Other Considerations

Proactive Duplicate Prevention

Instead of only reacting to duplicates, prevent them from happening:

- Push data validations to the source system, if possible.

- For Excel-based sources, use Data Validation Rules or Conditional Formatting to prevent or highlight duplicate keys.

Performance Considerations

If you’re checking for duplicates in a very large dataset, the secondary report itself might slow down or become inefficient. To mitigate:

- Offload duplicate detection to the source system.

- If the source is SQL-based, create dedicated queries to detect duplicates.

Final Thought: Turning This into a Reusable Framework

Once you build a system like this, could it be templated and reused across multiple reports? Could it be expanded to perform other data-integrity checks beyond duplicate keys? For example:

- Standardizing a “Data Health Dashboard” that all teams use.

- Creating Power BI admin reports that track multiple datasets across an organization.

- Using a Power BI Dataset Refresh Monitoring Solution to flag all failures, not just duplicates.

This does not have to be just about fixing one report—it could become a repeatable data governance strategy.

How do you handle data integrity challenges in Power BI? Let’s discuss!